How to Master Admission Webhooks In Kubernetes (GKE) (Part One)

I titled this “Mastering”, but to be honest, I am still a fair way from getting there. There is still so much to learn and so much to worry about, mainly because an admission webhook sees a large number of resource manifests and so has a significant blast radius when things go wrong. The cluster control plane could go for a ride if the webhook intercepts too much and misbehaves, either by denying requests it shouldn’t or by slowing down because it can’t cope. And that is just validating webhooks; it gets trickier when you start modifying resource manifests with mutating webhooks.

What are admission webhooks? “Admission webhooks are HTTP callbacks that receive admission requests (for resources in a K8s cluster) and do something with them. You can define two types of admission webhooks, validating admission webhook and mutating admission webhook. Mutating webhooks are invoked first, and can modify objects sent to the API server to enforce custom defaults. After all object modifications are complete, and after the incoming object is validated by the API server, validating webhooks are invoked and can reject requests to enforce custom policies.”
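
To make the “HTTP callback” idea concrete, here is a minimal sketch of the reply a validating webhook sends back. The API server POSTs an AdmissionReview JSON document, and the webhook answers with an AdmissionReview carrying the same uid and an allowed flag. The function name buildResponse and the sample review values are my own, purely for illustration.

```javascript
'use strict';

// Build the AdmissionReview reply for a validating webhook.
// `review` is the parsed JSON body that the API server POSTed to us.
function buildResponse(review, allowed, message) {
  // The uid must be echoed back so the API server can match the reply.
  const response = { uid: review.request.uid, allowed };
  if (!allowed) {
    // status.message is what the user ends up seeing, e.g. in kubectl output.
    response.status = { code: 403, message };
  }
  return {
    apiVersion: review.apiVersion, // echo back the version we were sent
    kind: 'AdmissionReview',
    response,
  };
}

// Hypothetical incoming review, trimmed to the fields used here.
const review = {
  apiVersion: 'admission.k8s.io/v1beta1',
  kind: 'AdmissionReview',
  request: { uid: 'abc-123', operation: 'CREATE', namespace: 'apps-demo' },
};

console.log(JSON.stringify(buildResponse(review, false, 'not your namespace'), null, 2));
```

A mutating webhook replies in the same envelope, but may additionally return a patch; that is not shown here.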

I must say I was very fortunate to get a comprehensive head start on Kubernetes Admission Webhooks from Marton Sereg of Banzai Cloud. If you are just getting started on this journey and want to keep it short and sweet initially, I strongly recommend reading his article before carrying on here. It covers everything from concepts and definitions, through development in Go, to a neat in-cluster deployment using certificates issued by the cluster CA.

Now, let’s dive into the “learnings galore” from my journey so far.

Make a pragmatic implementation choice

People will tell you about initiatives like Gatekeeper and claim that they make life easy, but really you have to make a choice between:

- learning a totally new domain-specific language, and then possibly discovering that it is either too restrictive or hard to debug

- choosing one of the languages your development teams commonly use, which is the pragmatic choice for maintenance even if you yourself hate it to begin with. For me, that was Node.js 😉

I think the long-term strategy could be to leverage the best of both worlds: handle the generic and simple policies with either an existing template from the open-policy-agent library or your own written in Rego, and BYO the others, which have complex or business-specific logic, in a general-purpose but lightweight language.

Minimize the blast radius

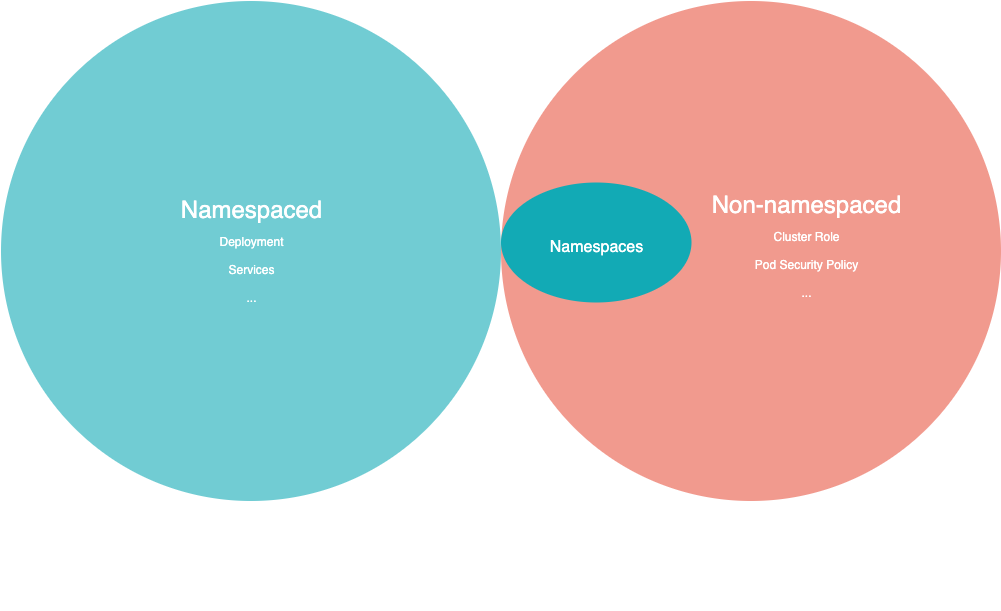

Our most complex use-case so far is (CI)CD driven. On our GKE clusters, we want to restrict groups to CRUD operations on the namespaces they “own”, e.g. only people in the pipeline team should be allowed to do CRUD on application namespaces, i.e. namespaces matching the pattern ^apps-.+. But GCP IAM does not let you fine-tune a permission that way; a role applies cluster-wide. Understandably. This means, for instance, that if you grant your Cloud Identity group pipeline@digizoo.io “Kubernetes Engine Admin”, the lucky few get full management of the cluster and its Kubernetes API objects. That’s it. No security control beyond that.

To achieve what we wanted, we had to implement a ValidatingWebhookConfiguration that observes all operations, i.e. CREATE, UPDATE and DELETE, on “all” the relevant manifests. The trick we used to NOT simply intercept “all” the manifests, but only those relevant to the requirement at hand, was to extract two subsets from the universe of “all” manifests: all namespaced resources, and the non-namespaced resources that are Namespace operations.

---
# Source: validation-admission-webhook-addon/templates/validatingwebhook.yaml
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: myrelease-validation-admission-webhook-addon-namespaces-identities
  labels:
    app: myrelease-validation-admission-webhook-addon
webhooks:
  - name: myrelease-validation-admission-webhook-addon-namespaces-identities.digizoo.io
    clientConfig:
      service:
        name: myservice
        namespace: mynamespace
        path: "/validate/namespaces/identities"
      caBundle: mycacertbundle
    rules:
      - operations: [ "CREATE", "UPDATE", "DELETE" ]
        apiGroups: ["*"]
        apiVersions: ["*"]
        resources: ["*/*"]
        scope: "Namespaced"
    failurePolicy: Ignore
---
# Source: validation-admission-webhook-addon/templates/validatingwebhook.yaml
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: myrelease-validation-admission-webhook-addon-namespaces
  labels:
    app: myrelease-validation-admission-webhook-addon
webhooks:
  - name: myrelease-validation-admission-webhook-addon-namespaces.digizoo.io
    clientConfig:
      service:
        name: myservice
        namespace: mynamespace
        path: "/validate/namespaces/identities"
      caBundle: mycacertbundle
    rules:
      - operations: [ "CREATE", "UPDATE", "DELETE" ]
        apiGroups: ["*"]
        apiVersions: ["*"]
        resources: ["namespaces"]
    failurePolicy: Ignore
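
Since both configurations point at the same path, the handler has to work out which namespace a request is about in both cases. The following is a rough sketch of how that could look, not our actual implementation; targetNamespace is a hypothetical helper, while the field names (kind, name, namespace, object, oldObject) are those of the AdmissionReview request.

```javascript
'use strict';

// Work out which namespace an admission request is about.
// `request` is the `request` field of the incoming AdmissionReview.
function targetNamespace(request) {
  const kind = request.kind || {};
  const isNamespaceObject = kind.kind === 'Namespace' && kind.group === '';

  if (isNamespaceObject) {
    // For operations on a Namespace itself, the namespace is the object's name.
    // On DELETE, `object` can be null and only `oldObject` is populated.
    const obj = request.object || request.oldObject || {};
    return request.name || (obj.metadata && obj.metadata.name) || null;
  }

  // Namespaced resources carry their namespace on the request itself.
  return request.namespace || null;
}

// A Pod being created in apps-demo.
console.log(targetNamespace({
  kind: { group: '', version: 'v1', kind: 'Pod' },
  namespace: 'apps-demo',
  name: 'web-0',
}));

// The apps-demo Namespace itself being deleted.
console.log(targetNamespace({
  kind: { group: '', version: 'v1', kind: 'Namespace' },
  name: 'apps-demo',
  object: null,
  oldObject: { metadata: { name: 'apps-demo' } },
}));
```

Returning null for anything that has no namespace leaves the caller free to decide whether that means “allow” or “deny”.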

Deploy All-in-One

Over time, once you get addicted to solving all kube problems under the sun using webhooks, you will have many. Some simple, others hard. But, from an operations perspective, it’s best to bundle them up into one container image. You could use something like Express routes in Node.js to expose each of your many implementations. Referring to the above snippet, our namespaces restriction webhook is available at “/validate/namespaces/identities”.
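
As a rough illustration of the all-in-one idea, the sketch below keeps a table of routes, each mapped to its own validator. To stay dependency-free it uses Node’s built-in http module rather than Express, and it serves plain HTTP only for brevity (a real webhook has to serve TLS). The second route and both validator bodies are made up for the example.

```javascript
'use strict';
const http = require('http');

// One entry per webhook implementation bundled into the image.
// Each validator takes the AdmissionReview request and returns { allowed, message }.
const routes = new Map([
  ['/validate/namespaces/identities', () => ({ allowed: true, message: '' })],
  // Hypothetical second route, only here to show the pattern.
  ['/validate/example/always-deny', () => ({ allowed: false, message: 'denied by example route' })],
]);

// Pure dispatch step: pick the validator for the path and wrap its decision.
function dispatch(path, review) {
  const validator = routes.get(path);
  if (!validator) return null;
  const { allowed, message } = validator(review.request);
  const response = { uid: review.request.uid, allowed };
  if (!allowed) response.status = { code: 403, message };
  return { apiVersion: review.apiVersion, kind: 'AdmissionReview', response };
}

const server = http.createServer((req, res) => {
  let body = '';
  req.on('data', (chunk) => { body += chunk; });
  req.on('end', () => {
    let status = 200;
    let payload;
    try {
      // Strip any query string before matching the route.
      const path = new URL(req.url, 'http://localhost').pathname;
      payload = dispatch(path, JSON.parse(body));
      if (!payload) { status = 404; payload = { error: 'unknown route' }; }
    } catch (err) {
      status = 400;
      payload = { error: 'malformed AdmissionReview' };
    }
    res.writeHead(status, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify(payload));
  });
});

// server.listen(8080) would start it; left out so the sketch has no side effects.
```

Keeping dispatch separate from the HTTP plumbing means each route can be unit tested without starting a server.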

Test-Test-Test

Given the blast radius, it is easy to understand why you need to unit test your code against a lot of scenarios. Otherwise you will have a frustratingly slow and repetitive development and test loop. For this reason, I started to appreciate Node.js for the admission webhooks use-case, as it allows a quick, iterative feedback loop of dev and test. What we have ended up doing is adding lots of positive and negative unit tests for all the anticipated scenarios. And whenever we run into a new unhandled scenario, it’s fairly quick to try a patch and re-test.

Err on the “safe” side, and “alert” enough

In the early stages of deploying the webhook in lower environments, we configure it with safe fallback options. When it comes across an identity it is not configured for, or a manifest it cannot handle, the configuration lets it err on the safe side and “let” the request in. Equally importantly, the code generates INFO logs for those scenarios, so that we can alert and act on them. We also have cheat codes to exempt god-like, system or rogue unseen identities, or to bypass the webhook altogether for when the cluster goes belly up.

{
  "bypass": false,
  "defaultMode": "allow",
  "exemptedMembers": ["superadmin@digizoo.io", "cluster-autoscaler"],
  "identitiesToNamespacesMapping": [
    {
      "identity": "gcp-pipeline-team@digizoo.io",
      "namespaces": [
        "^app-.+"
      ]
    }
  ]
}
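
One way a configuration like this could be evaluated is sketched below. The semantics are my assumptions for the sake of the example, not a description of our actual code: an identity is matched against both the username and the groups from the request’s userInfo, “bypass” short-circuits everything, and an unmapped identity falls back to “defaultMode” while being flagged so it can be logged and alerted on. The decide function and the alert flag are hypothetical.

```javascript
'use strict';

// Decide whether `userInfo` may touch `namespace` under the given config.
// Returns { allowed, reason, alert }; `alert` marks decisions worth an INFO log.
function decide(config, userInfo, namespace) {
  // Break-glass switch: let everything through, but make noise about it.
  if (config.bypass) return { allowed: true, reason: 'bypass', alert: true };

  // Assumption: an identity may be the username or any of the user's groups.
  const identities = [userInfo.username, ...(userInfo.groups || [])];

  if (identities.some((id) => config.exemptedMembers.includes(id))) {
    return { allowed: true, reason: 'exempted', alert: false };
  }

  // Collect the namespace patterns of every mapping that applies to this caller.
  const patterns = config.identitiesToNamespacesMapping
    .filter((m) => identities.includes(m.identity))
    .flatMap((m) => m.namespaces);

  if (patterns.length === 0) {
    // Identity we were not configured for: err on the side of defaultMode.
    return { allowed: config.defaultMode === 'allow', reason: 'unmapped-identity', alert: true };
  }

  const allowed = patterns.some((p) => new RegExp(p).test(namespace));
  return { allowed, reason: allowed ? 'namespace-match' : 'namespace-mismatch', alert: false };
}

const config = {
  bypass: false,
  defaultMode: 'allow',
  exemptedMembers: ['superadmin@digizoo.io', 'cluster-autoscaler'],
  identitiesToNamespacesMapping: [
    { identity: 'gcp-pipeline-team@digizoo.io', namespaces: ['^app-.+'] },
  ],
};

// Hypothetical user who belongs to the pipeline group.
const pipelineUser = { username: 'jane@digizoo.io', groups: ['gcp-pipeline-team@digizoo.io'] };
console.log(decide(config, pipelineUser, 'app-payments'));
console.log(decide(config, pipelineUser, 'kube-system'));
```

Once the lower environments stop producing “unmapped-identity” alerts, switching defaultMode to something stricter becomes a configuration change rather than a code change.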

Fail Safe if required

When the hook fails to provide a decision, whether because of an unhandled scenario, a bug in the implementation, or a timeout when the API server is unable to call the webhook, and the blast radius of your webhook is significantly large, it’s a wise idea to fail safe: a soft “Ignore” failure policy instead of a hard “Fail”. But if you choose “Ignore”, you also need a good alerting mechanism to deal with those failures so that you do not create a security hole.

The way we have dealt with this is to monitor the webhook service. We run a Kubernetes CronJob that polls the service every minute and reports when it cannot reach it. Be careful to choose the right parameters for your CronJob, like “concurrencyPolicy: Forbid” and “restartPolicy: Never”, and then monitor this monitor using Kubernetes Events published to Stackdriver. Such failures tend to happen in our non-production deployments once a day, when our preemptible nodes are recycled and the service takes a minute or two to realise the pod is gone.

(TODO: There should be a better way to fail fast with GCP preemptible node termination handlers). (TODO: For defense-in-depth, it would be good to also monitor the API server logs for failures getting the service to respond but, at the moment, Google is still working on access to master logs).
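
The polling side of such a monitor can be very small. Below is a minimal sketch of a health probe that a CronJob container could run, assuming the webhook exposes /healthz over HTTPS. The probe function, the 5 second default timeout and the injectable get parameter (there only to make it testable) are my own choices; if the certificate is signed by a private CA, Node can be pointed at it with the NODE_EXTRA_CA_CERTS environment variable.

```javascript
'use strict';
const https = require('https');

// Probe a health endpoint once. Always resolves (never rejects) with
// { healthy, detail } so the caller can log the outcome and pick an exit code.
function probe(url, timeoutMs = 5000, get = https.get) {
  return new Promise((resolve) => {
    const req = get(url, { timeout: timeoutMs }, (res) => {
      res.resume(); // discard the body so the socket is released
      resolve({ healthy: res.statusCode === 200, detail: `HTTP ${res.statusCode}` });
    });
    // The 'timeout' event only signals idleness; the request must be destroyed by hand.
    req.on('timeout', () => req.destroy(new Error(`no response within ${timeoutMs} ms`)));
    // Connection refused, DNS failure, TLS errors and our own timeout all land here.
    req.on('error', (err) => resolve({ healthy: false, detail: err.message }));
  });
}

module.exports = { probe };
```

A wrapper script would call probe with the service URL, log the detail, and exit non-zero when healthy is false, so that the failed Job shows up as a Kubernetes Event that can be alerted on.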

Make it available “enough” with the “right” replicas

It is important to make sure your webhook service is available enough, whichever of the fail-open or fail-closed options above you choose. To this end, we run 3 replicas with pod anti-affinity across the GCP zones. (TODO: we plan to leverage guaranteed scheduling pod annotations once they are available to us outside of the “kube-system” namespace in cluster versions ≥ 1.17).

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "validation-admission-webhook.fullname" . }}
  labels:
{{ include "validation-admission-webhook.labels" . | indent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app.kubernetes.io/name: {{ include "validation-admission-webhook.name" . }}
      app.kubernetes.io/instance: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app.kubernetes.io/name: {{ include "validation-admission-webhook.name" . }}
        app.kubernetes.io/instance: {{ .Release.Name }}
      annotations:
        checksum/config: {{ include (print $.Chart.Name "/templates/configmap.yaml") . | sha256sum }}
    spec:
      securityContext:
        runAsUser: 65535
    {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
    {{- end }}
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ required "A valid image.repository entry required!" .Values.image.repository }}:{{ required "A valid image.tag entry required!" .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          command: ["node", "app.js", "{{ .Values.webhook_routes }}"]
          env:
            - name: DEBUG
              value: "{{ default "0" .Values.webhook_debug }}"
          ports:
            - name: https
              containerPort: 443
              protocol: TCP
          securityContext:
            capabilities:
              add:
                - NET_BIND_SERVICE
          livenessProbe:
            httpGet:
              path: /healthz
              port: 443
              scheme: HTTPS
          readinessProbe:
            httpGet:
              path: /healthz
              port: 443
              scheme: HTTPS
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
          volumeMounts:
            - name: webhook-certs
              mountPath: /etc/webhook/certs
              readOnly: true
            - name: webhook-config
              mountPath: /usr/src/app/config
              readOnly: true
      volumes:
        - name: webhook-certs
          secret:
            secretName: {{ include "validation-admission-webhook.fullname" . }}-certs
        - name: webhook-config
          configMap:
            defaultMode: 420
            name: {{ include "validation-admission-webhook.fullname" . }}
    {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
    {{- end }}
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app.kubernetes.io/name
                    operator: In
                    values:
                      - {{ include "validation-admission-webhook.name" . }}
              topologyKey: "topology.kubernetes.io/zone"
    {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
    {{- end }}

A word about GKE Firewalling

By default, firewall rules restrict the cluster master’s communication with nodes to ports 443 (HTTPS) and 10250 (kubelet) only. Additionally, GKE enables the “enable-aggregator-routing” option by default, which makes the master bypass the service and talk straight to the pod. Hence, either expose your webhook service and deployment on port 443, or poke a hole in the firewall for the port being used. It took me quite some time to work this out, as I was not using 443 originally, the failure policy was at the ValidatingWebhookConfiguration beta resource default of “Ignore”, and I did not have access to master logs in GKE.

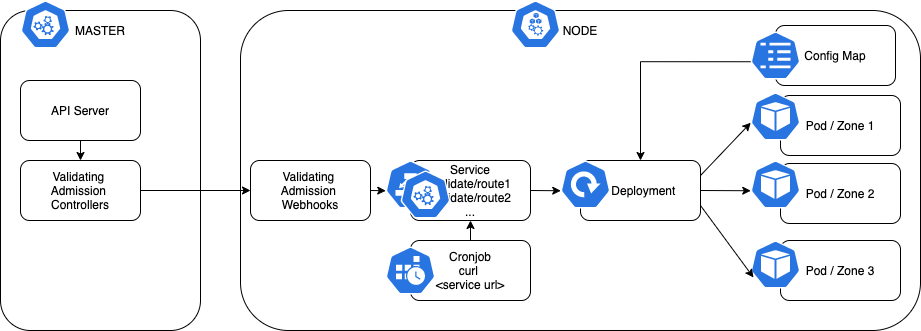

Deployment Architecture

In summary, to help picture things, this is what the deployment architecture for the described suite of webhooks looks like.

References

- Kubernetes documentation – https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/

- Banzai Cloud article – https://banzaicloud.com/blog/k8s-admission-webhooks/

- open-policy-agent/gatekeeper – https://github.com/open-policy-agent/gatekeeper

- Guaranteed Scheduling – https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/

- GKE Firewalling – https://cloud.google.com/kubernetes-engine/docs/how-to/private-clusters#add_firewall_rules